Open the path in binary write mode (`'wb'`) as `f` to write a single file to HDFS.

The Python client library works with HDFS directly, without making a system call to `hdfs dfs`.

How to write a file to HDFS in Python. 13.09.2017: Read and write HDFS files with a standalone Python script. HDFS `mkdir` command: this command is used to create a new directory.

You can use the command below to do so: `$HADOOP_HOME/bin/hadoop jar $HADOOP_HOME/hadoop-streaming.jar -input myInputDirs -output myOutputDir -mapper myPythonScript.py -file myPythonScript.py`.

The `-file myPythonScript.py` option ships the script to the cluster along with the job. You need to create new files each time you want to dump files.
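For a Hadoop streaming job, the mapper script only has to read lines from standard input and print key/value records to standard output, with a tab separating key from value by default. Below is a minimal sketch of what a `myPythonScript.py` could contain. The word-count logic and the names `map_lines` and `main` are illustrative assumptions and are not taken from the original post.

```python
#!/usr/bin/env python3
import sys


def map_lines(lines):
    """Yield one "word<TAB>1" record per word (hypothetical word-count mapper)."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def main(stdin=sys.stdin, stdout=sys.stdout):
    # Hadoop streaming feeds the input split on stdin and collects stdout.
    for record in map_lines(stdin):
        stdout.write(record + "\n")

# When saved as myPythonScript.py, end the file with a call to main().
```

Keeping the mapping logic in a plain generator makes it easy to check locally before submitting the job.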

03.01.2017: `hdfs3.HDFileSystem('localhost', port=20500, user='wesm')` opens an HDFS connection. Files are opened with `with open(filename) as f:`. Snakebite is one of the popular libraries used for communicating with HDFS.

05.10.2016: You're facing that error because HDFS cannot append to files when they are written from the shell. To store a DataFrame in HDF5 format, create it with `df = pd.DataFrame([[1, 1.0, 'a']], columns=['x', 'y', 'z'])` and call `df.to_hdf('store.h5', 'data')` (answered Oct 17, 2020 by MD).

I have some standalone Python files which access data through the common command. Using the Python client library provided by the Snakebite package, we can easily write Python code that works on HDFS. The client then interacts directly with the DataNodes to write data.

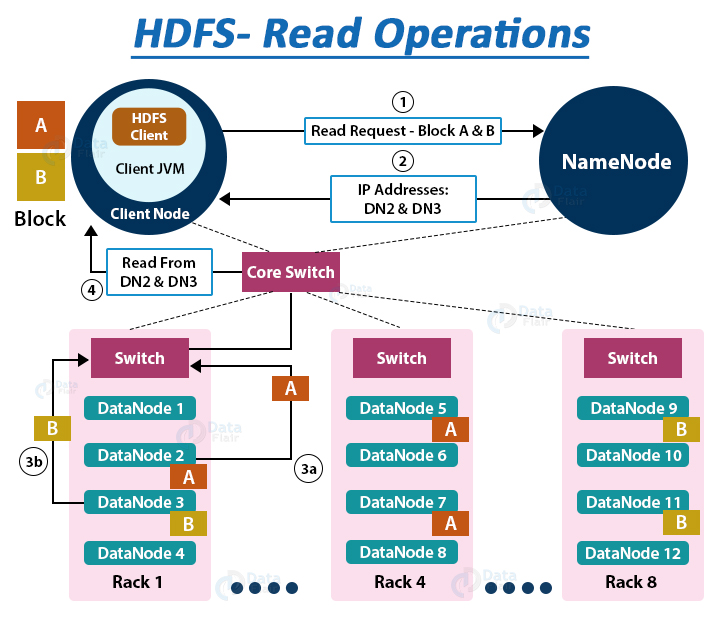

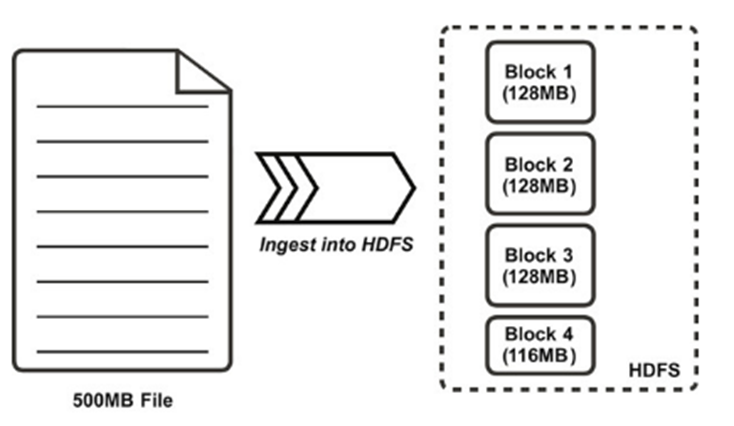

Snakebite uses protobuf messages to communicate directly with the NameNode. To write data to HDFS, the client first contacts the NameNode to get permission to write and to obtain the IP addresses of the DataNodes where it will write the data.
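The write sequence described here can be pictured with a toy, in-memory model. This only illustrates the order of steps (ask the NameNode, then send data to the DataNodes it returns). The class and function names are hypothetical and do not correspond to any real HDFS client API. Block splitting and the DataNode-to-DataNode replication pipeline are deliberately left out.

```python
class DataNode:
    def __init__(self, address):
        self.address = address
        self.blocks = {}  # path -> bytes held by this node

    def store(self, path, data):
        self.blocks[path] = data


class NameNode:
    def __init__(self, datanodes, replication=3):
        self.datanodes = datanodes
        self.replication = replication
        self.locations = {}  # path -> addresses of the nodes holding it

    def request_write(self, path):
        # Refuse the write if the path already exists.
        if path in self.locations:
            raise FileExistsError(path)
        chosen = self.datanodes[: self.replication]
        self.locations[path] = [dn.address for dn in chosen]
        return chosen


def client_write(namenode, path, data):
    # Step 1: ask the NameNode for permission and the target DataNodes.
    targets = namenode.request_write(path)
    # Step 2: send the data to those DataNodes directly.
    for dn in targets:
        dn.store(path, data)
    return [dn.address for dn in targets]
```

Note that the NameNode in this model only records metadata (which nodes hold the file); the bytes themselves never pass through it.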

I want to make the Python scripts able to run without changing too much of the code and, if possible, without dependencies. 04.04.2017: Please check this article.

A better way to do this would be to use a Python HDFS client to do the dumping for you. The NameNode provides the addresses of the DataNodes (slaves) on which the client will start writing the data. For example, in the Python code you can specify where your `core-site.xml` and `hdfs-site.xml` are located.

HDFS `put` command: this command is used to move data to the Hadoop file system, for example `hdfs dfs -put /users/temp/file.txt This PC/Desktop/`.

HDFS `ls` command: this command is used to list the contents of the present working directory.

17.02.2020: Writing a file on HDFS. Create a simple pandas DataFrame with `liste_hello = ['hello1', 'hello2']`, `liste_world = ['world1', 'world2']`, and `df = pd.DataFrame(data={'hello': liste_hello, 'world': liste_world})`.

This basically creates a MapReduce job for your Python script.

17.10.2020: Hierarchical Data Format (HDF) is a set of file formats (HDF4, HDF5) designed to store and organize large amounts of data. Despite the similar name, it is unrelated to HDFS.

27.05.2020: Hadoop commands can be run from Python through a `run_cmd` helper that returns a `(ret, out, err)` tuple:

- Run the Hadoop `ls` command: `(ret, out, err) = run_cmd(['hdfs', 'dfs', '-ls', hdfs_file_path])`, then `lines = out.split('\n')`
- Run the Hadoop `get` command: `(ret, out, err) = run_cmd(['hdfs', 'dfs', '-get', hdfs_file_path, local_path])`
- Run the Hadoop `put` command: `(ret, out, err) = run_cmd(['hdfs', 'dfs', '-put', local_file, hdfs_file_path])`
- Run the Hadoop `copyFromLocal` command: `(ret, out, err) = run_cmd(['hdfs', 'dfs', '-copyFromLocal', local_file, hdfs_file])`
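The `run_cmd` helper used in these calls is not shown. A minimal sketch of it, built on `subprocess.Popen`, might look like the following. The signature (a list of arguments in, a `(ret, out, err)` tuple out) is inferred from the calls above, and the `hdfs_dfs` convenience function is a hypothetical addition.

```python
from subprocess import Popen, PIPE


def run_cmd(args_list):
    """Run a command and return (return_code, stdout_text, stderr_text)."""
    proc = Popen(args_list, stdout=PIPE, stderr=PIPE, universal_newlines=True)
    out, err = proc.communicate()
    return proc.returncode, out, err


def hdfs_dfs(*args):
    """Hypothetical wrapper: hdfs_dfs('-ls', '/tmp') runs `hdfs dfs -ls /tmp`."""
    return run_cmd(["hdfs", "dfs", *args])
```

Calling `hdfs_dfs` requires the `hdfs` binary to be on the `PATH`. A non-zero `ret` signals failure, with the details in `err`.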

Hadoop streaming is started with `$HADOOP_HOME/bin/hadoop jar $HADOOP_HOME/hadoop-streaming.jar`. Snakebite doesn't support Python 3.

`df.to_csv(writer)` writes the DataFrame to the open HDFS writer. I can recommend the snakebite, pydoop, and hdfs packages.

To write a file to HDFS, a client needs to interact with the master, i.e. the NameNode.

06.12.2018: `from subprocess import Popen, PIPE`. Hope this helps.

The client can now write data directly to the DataNodes, and the DataNodes create the data write pipeline. In the example, `hdfs.delete(path)` is called with `path = '/tmp/test-data-file-1'`.

`hdfscli upload --alias=dev weights.json models/`. Read all files inside a folder from HDFS and store them locally. I haven't tried the third one, though, so I cannot comment on it. `hdfs dfs -put <local source>`.

`hdfs dfs -mkdir <directory_name>` creates a directory. Writing a DataFrame to HDFS: `with client_hdfs.write('/user/hdfs/wiki/helloworld.csv', encoding='utf-8') as writer:`.
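The `write(..., encoding='utf-8')` call is used as a context manager that yields a file-like writer, so anything that can write text to a file object can target HDFS. The sketch below uses the standard-library `csv` module instead of pandas to stay dependency-free. `write_rows_csv` and `connect` are hypothetical helpers, and the client is assumed to behave like `InsecureClient` from the `hdfs` (HdfsCLI) package.

```python
import csv


def write_rows_csv(client, hdfs_path, header, rows):
    """Write rows as CSV through client.write(), which must work as a context
    manager yielding a text writer (as hdfs.InsecureClient.write does when an
    encoding is given)."""
    with client.write(hdfs_path, encoding="utf-8") as writer:
        out = csv.writer(writer, lineterminator="\n")
        out.writerow(header)
        out.writerows(rows)


def connect(url, user):
    """Assumed setup: needs `pip install hdfs`; url is the WebHDFS endpoint."""
    from hdfs import InsecureClient  # lazy import so the sketch loads without it
    return InsecureClient(url, user=user)
```

With pandas, the body of the `with` block would simply be `df.to_csv(writer)`.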

It can be any path where these configuration files are present. 08.10.2020: Python can also be used to write code for Hadoop.
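As one illustration of pointing Python code at a Hadoop configuration directory, the sketch below reads the `fs.defaultFS` property from `core-site.xml`. The `conf_dir` argument and the `read_default_fs` name are hypothetical. The XML layout (a `<configuration>` root holding `<property>` elements with `<name>` and `<value>` children) is the standard Hadoop configuration format.

```python
import os
import xml.etree.ElementTree as ET


def read_default_fs(conf_dir):
    """Return the fs.defaultFS value from <conf_dir>/core-site.xml, or None."""
    tree = ET.parse(os.path.join(conf_dir, "core-site.xml"))
    for prop in tree.getroot().iter("property"):
        if prop.findtext("name") == "fs.defaultFS":
            return prop.findtext("value")
    return None
```

The returned value is the NameNode URI (for example an `hdfs://host:port` string) that a client library would connect to.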

